1.What is SixthSense?

In scientific (or non-scientific) terms, sixth sense is defined as Extra Sensory Perception, or ESP for short: the reception of information that is gained neither through any of the five senses nor from past experience or prior knowledge. SixthSense aims to integrate online information and technology more seamlessly into everyday life. By making available the information needed for decision-making, beyond what we can access with our five senses, it effectively gives users a sixth sense.

2.Earlier SixthSense Prototype

Maes’ MIT group, which includes seven graduate students, was thinking about how a person could be more integrated into the world around them and access information without having to do something like take out a phone. They initially produced a wristband that would read a Radio Frequency Identification (RFID) tag to know, for example, which book a user is holding in a store.

They also had a ring that used infrared to communicate with beacons on supermarket smart shelves and give the wearer information about products. When the wearer grabbed a package of macaroni, the ring would glow red or green to indicate whether the product was organic or free of peanut traces, or met whatever other criteria had been programmed into the system.
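The ring's red/green decision can be pictured as a simple rule check. The sketch below is only an illustration under stated assumptions: the `Product` type, the tag names and the `ring_colour` function are hypothetical and do not come from the original prototype.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    """Hypothetical product record as a smart shelf might report it."""
    name: str
    tags: frozenset  # e.g. frozenset({"organic", "peanut-free"})


def ring_colour(product, required_tags):
    """Return 'green' if the product carries every required tag, else 'red'."""
    return "green" if set(required_tags) <= product.tags else "red"


macaroni = Product("macaroni", frozenset({"organic"}))
print(ring_colour(macaroni, {"organic"}))
print(ring_colour(macaroni, {"organic", "peanut-free"}))
```

Changing the set of required tags is all it takes to reprogram the criteria in this sketch.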

They wanted to make information more useful to people in real time, with minimal effort and in a way that doesn’t require any behaviour changes. The wristband was getting close, but the user still had to take out a cell phone to look at the information.

That’s when they struck upon the idea of accessing information from the internet and projecting it. Someone wearing the wristband could pick up a paperback in a bookstore and immediately call up reviews of the book, projecting them onto a surface in the store, or run a keyword search through the book by accessing digitized pages on Amazon or Google Books.

They started with a larger projector mounted on a helmet. But that proved cumbersome: if someone was projecting data onto a wall and then turned to speak to a friend, the data would project onto the friend’s face.

3.Recent Prototype

Present Device

Now they have switched to a smaller projector and created the pendant prototype to be worn around the neck.

The SixthSense prototype is composed of a pocket projector, a mirror and a camera. The hardware components are coupled in a pendant-like mobile wearable device. Both the projector and the camera are connected to the mobile computing device in the user’s pocket.

SixthSense can be thought of as a blend of the computer and the cell phone. The device hangs around the wearer’s neck, and the attached micro projector does the projecting. In effect, the wearer becomes a walking computer, with the fingers acting as mouse and keyboard.

The prototype was built from an ordinary webcam and a battery-powered 3M projector with an attached mirror, all connected to an internet-enabled mobile phone. The setup, which costs less than $350, allows the user to project information from the phone onto any surface: walls, the body of another person or even your hand.

Mistry wore the device on a lanyard around his neck, and colored Magic Marker caps on four fingers (red, blue, green and yellow) helped the camera distinguish the four fingers and recognize his hand gestures with software that Mistry created.

WORKING OF SIXTH SENSE TECHNOLOGY

4.Components

The hardware components are coupled in a pendant-like mobile wearable device:

- Camera

- Projector

- Mirror

- Mobile Component

- Color Markers


5.Camera


A webcam captures and recognises an object in view and tracks the user’s hand gestures using computer-vision based techniques. It sends the data to the smart phone. The camera, in a sense, acts as a digital eye, seeing what the user sees. It also tracks the movements of the thumbs and index fingers of both of the user's hands. The camera recognizes objects around you instantly, with the microprojector overlaying the information on any surface, including the object itself or your hand.

6.Projector


The projector projects visual information, enabling surfaces, walls and physical objects around us to be used as interfaces, which also opens up interaction and sharing. The projector itself contains a battery with 3 hours of battery life. The designers want the device to merge with the physical world in a real physical sense: you touch an object and project information onto that object, so the information looks like it is part of the object. A tiny LED projector displays data sent from the smart phone on any surface in view, whether an object, a wall, or a person.

7.Mirror

The mirror is significant because the projector hangs from the neck pointing downwards: the mirror reflects the projected image onto the desired surface in front of the user.
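The mirror's role can be checked with the standard reflection formula r = d - 2(d·n)n. The sketch below is illustrative only: the coordinate convention and the 45-degree mirror tilt are assumptions, since the source does not state the actual mounting angle.

```python
import math


def reflect(direction, normal):
    """Reflect a direction vector about a unit surface normal: r = d - 2(d.n)n."""
    dot = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2 * dot * n for d, n in zip(direction, normal))


# Assumed axes: x to the wearer's right, y up, z forward (away from the wearer).
down = (0.0, -1.0, 0.0)           # projector beam pointing straight down
s = math.sqrt(0.5)
mirror_normal = (0.0, s, s)       # assumed mirror tilted 45 degrees (hypothetical)

forward = reflect(down, mirror_normal)
print(forward)  # approximately (0, 0, 1): the beam now points forward
```

With a different tilt, the same function gives the direction in which the image leaves the pendant.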

8.Mobile Component

Smartphones in our pockets transmit and receive voice and data anywhere and to anyone via the mobile internet. An accompanying Web-enabled smartphone in the user’s pocket runs the SixthSense software, processes the video data, and handles the connection to the internet. Other software searches the Web and interprets the hand gestures.

9.Color Markers

The color markers sit at the tips of the user’s fingers. Marking the user’s fingers with red, yellow, green, and blue tape helps the webcam recognize gestures. The movements and arrangements of these markers are interpreted as gestures that act as interaction instructions for the projected application interfaces.
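One way to picture how colored markers might be located in a camera frame is hue thresholding followed by a centroid per color. This is a minimal sketch, not the actual SixthSense software: the hue centres, tolerances and function names are assumptions, and a frame is modelled simply as rows of `(r, g, b)` tuples.

```python
import colorsys

# Assumed hue centres (degrees) for the four marker colours; real values
# would need calibrating against the actual camera and lighting.
MARKER_HUES = {"red": 0, "yellow": 60, "green": 120, "blue": 240}


def classify_pixel(rgb, hue_tolerance=20, min_saturation=0.5, min_value=0.3):
    """Return the marker colour name for an (r, g, b) pixel, or None."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    if s < min_saturation or v < min_value:
        return None  # too grey or too dark to be a marker
    hue_deg = h * 360.0
    for name, centre in MARKER_HUES.items():
        diff = abs(hue_deg - centre)
        if min(diff, 360.0 - diff) <= hue_tolerance:  # hue wraps around at 360
            return name
    return None


def marker_centroids(frame):
    """Map each detected marker colour to the (x, y) centroid of its pixels."""
    sums = {}
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            name = classify_pixel(pixel)
            if name is not None:
                sx, sy, n = sums.get(name, (0, 0, 0))
                sums[name] = (sx + x, sy + y, n + 1)
    return {name: (sx / n, sy / n) for name, (sx, sy, n) in sums.items()}
```

Tracking the centroids from frame to frame then gives the marker movements that a gesture recognizer would interpret.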

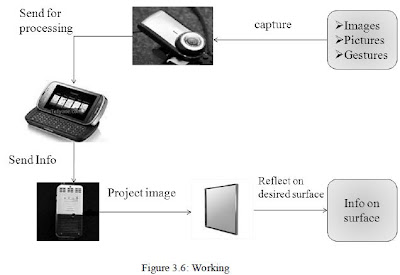

10.Working

- The hardware that makes Sixth Sense work is a pendant-like mobile wearable interface.

- It has a camera, a mirror and a projector, and is connected wirelessly to a Bluetooth, 3G or Wi-Fi smartphone that can slip comfortably into one’s pocket.

- The camera recognizes individuals, images, pictures and the gestures one makes with their hands.

- Information is sent to the smartphone for processing.

- The downward-facing projector projects the output image onto the mirror.

- The mirror reflects the image onto the desired surface.

- Thus, digital information is freed from its confines and placed in the physical world.

The entire hardware apparatus is housed in a pendant-shaped mobile wearable device. The camera recognises individuals, images, pictures and the gestures one makes with their hands, and the projector projects information onto whatever surface is in front of the person. The mirror is significant because the projector hangs from the neck pointing downwards. In the demo video that was broadcast to showcase the prototype to the world, Mistry wears coloured caps on his fingers so that the software can more easily differentiate between the fingers, as the various applications require.

The software analyses the video data captured by the camera and tracks the locations of the coloured markers using simple computer-vision techniques. Any number of hand gestures and movements can be supported, as long as the system can reliably identify and distinguish them, preferably through unique and varied fiducials. This is possible because the SixthSense device supports multi-touch and multi-user interaction.

MIT basically plans to augment reality with a pendant picoprojector: hold up an object at the store and the device blasts relevant information onto it (like environmental stats, for instance), which can be browsed and manipulated with hand gestures. The "sixth sense" in question is the internet, which naturally supplies the data, and that can be just about anything -- MIT has shown off the device projecting information about a person you meet at a party on that actual person (pictured), projecting flight status on a boarding pass, along with an entire non-contextual interface for reading email or making calls. It's pretty interesting technology that, like many MIT Media Lab projects, makes the wearer look like a complete dork -- if the projector doesn't give it away, the colored finger bands the device uses to detect finger motion certainly might.

The idea is that SixthSense tries to determine not only what someone is interacting with, but also how he or she is interacting with it. The software searches the internet for information that is potentially relevant to that situation, and then the projector takes over.

"All the work is in the software," says Dr Maes. "The system is constantly trying to figure out what's around you, and what you're trying to do. It has to recognize the images you see, track your gestures, and then relate it all to relevant information at the same time."

The software recognizes three kinds of gestures:

- Multitouch gestures, like the ones you see in Microsoft Surface or the iPhone, where you touch the screen and make the map move by pinching and dragging.

- Freehand gestures, like when you take a picture [as in the photo above]. Or, you might have noticed in the demo, because of my culture, I do a namaste gesture to start the projection on the wall.

- Iconic gestures, drawing an icon in the air. Like, whenever I draw a star, show me the weather. When I draw a magnifying glass, show me the map. You might want to use other gestures that you use in everyday life. This system is very customizable.
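The customizable mapping from recognized gestures to actions described above could be modelled as a small lookup table. The sketch below is hypothetical: the `GestureRegistry` class, the gesture names and the returned strings are illustrative stand-ins, and recognizing the gesture itself is assumed to happen elsewhere.

```python
class GestureRegistry:
    """Hypothetical table binding recognized gesture names to actions."""

    def __init__(self):
        self._actions = {}

    def bind(self, gesture, action):
        """Associate a gesture name with a zero-argument callable."""
        self._actions[gesture] = action

    def dispatch(self, gesture):
        """Run the action bound to the gesture; return None if unbound."""
        action = self._actions.get(gesture)
        return action() if action is not None else None


registry = GestureRegistry()
registry.bind("star", lambda: "show weather")            # iconic gesture
registry.bind("magnifying_glass", lambda: "show map")    # iconic gesture
registry.bind("namaste", lambda: "start projection")     # freehand gesture

print(registry.dispatch("star"))
```

Rebinding a name, or binding a new one, is how a user-specific gesture would be added in this sketch.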

The technology is mainly based on hand gesture recognition, image capturing, processing, and manipulation, etc. The map application lets the user navigate a map displayed on a nearby surface using hand gestures, similar to gestures supported by multi-touch based systems, letting the user zoom in, zoom out or pan using intuitive hand movements. The drawing application lets the user draw on any surface by tracking the fingertip movements of the user’s index finger.
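As a rough illustration of how zooming and panning could be derived from two tracked fingertip markers, the sketch below compares the marker positions in two consecutive frames. It is an assumption-laden sketch rather than the map application's actual logic: `pinch_update` and its conventions (zoom as a distance ratio, pan as midpoint displacement) are hypothetical.

```python
import math


def pinch_update(prev, curr):
    """Derive (zoom_factor, (dx, dy)) from two marker positions per frame.

    prev and curr are each ((x1, y1), (x2, y2)): the two fingertip markers
    in the previous and current frame.
    """
    def distance(p, q):
        return math.hypot(q[0] - p[0], q[1] - p[1])

    def midpoint(p, q):
        return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)

    d_prev, d_curr = distance(*prev), distance(*curr)
    # Fingers moving apart give zoom > 1 (zoom in); together, zoom < 1.
    zoom = d_curr / d_prev if d_prev > 0 else 1.0
    m_prev, m_curr = midpoint(*prev), midpoint(*curr)
    # Both fingers moving together shifts the midpoint, which pans the map.
    pan = (m_curr[0] - m_prev[0], m_curr[1] - m_prev[1])
    return zoom, pan


print(pinch_update(((0, 0), (10, 0)), ((0, 0), (20, 0))))
```

A drawing application could reuse the same per-frame positions by appending the index-finger marker's coordinates to a polyline instead of computing zoom and pan.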
